Even in 2021, sexism remains highly prevalent in our daily lives.

But as it turns out, sexism shows up even in places we wouldn't expect, such as the electronic tools many of us use almost daily.

Dora Vargha, a Senior Lecturer in Medical Humanities at the University of Exeter, recently ran an informal experiment with Google Translate to test how sexist its built-in assumptions are when translating between languages.

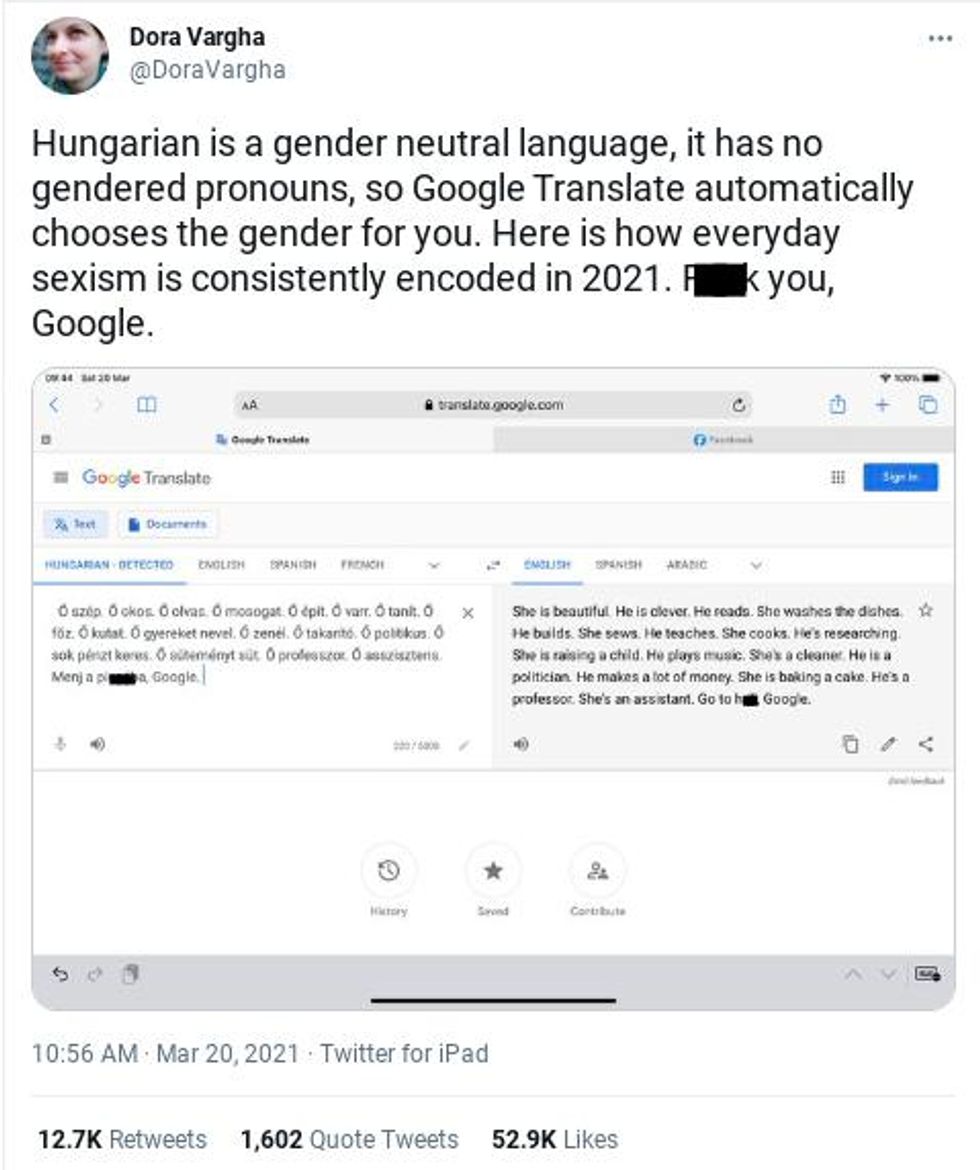

Vargha shared her results, and her disappointment, on Twitter.

Vargha focused on Hungarian, a gender-neutral language.

Hungarian has no grammatical gender: nouns are not classed as "masculine" or "feminine," and a single third-person pronoun, ő, covers both "he" and "she," so a sentence does not indicate whether its subject is male or female.

Vargha wanted to see what would happen when several simple Hungarian sentences were translated into a language that uses gendered pronouns, such as English.

The results were worse than Vargha expected.

Not only did Google Translate insert a gendered pronoun into each of Vargha's gender-neutral sentences, but its choices followed a pattern that perpetuates sexist stereotypes.

Here are a few of the highlights:

"She is beautiful. He is clever."

"She washes the dishes. He builds."

"He's researching. She's raising a child."

"He is a politician. He makes a lot of money. She is baking a cake."

Every sentence about beauty, domestic tasks (such as washing dishes and baking), or childcare was assigned the female pronoun, "she."

Every sentence about work, research, politics, or making money was assigned the male pronoun, "he."

The reactions to this experiment were mixed.

Some split hairs, arguing that the problem lay with the AI's "learning" algorithm rather than with Google itself.

To be fair it's not Google's fault. It's using a statistical language model build from data scrapped from the web. There are huge efforts in tha machine learning community to degender biases like this, but it's not easy.

— Léa 🇫🇷 (@la_lea_la) March 20, 2021

Great example of how everyone thinks algorithms are neutral and will fix human biases but actually they reinforce and amplify them. Invisible Women by Caroline Criado Perez has a lot of data about this in, for example, hiring

— Dr. Stephanie Lovett (@uffishthot) March 20, 2021

Others argued that the problem stems from societal norms and the way they shape our languages.

The sexism isn't in Google, it's in our society.

— Kali Ravel (@KaliDoesGenes) March 20, 2021

Still others pointed out that defending Google in a case like this would only perpetuate the problem.

Disgusted but not surprised. I tried it in Spanish, which is a very gendered language, but I used the neutral 3rd person forms that don't use the pronoun at all) and every single one translates as male, women don't exist at all! Not sure if this is worse or better...😅 pic.twitter.com/ejjz4nk9V1

— MHMB🏴🇪🇸🇮🇪🇪🇺🏳️🌈🏴 (@M_Bizquits) March 20, 2021

Though the reactions were mixed, most could agree that gender stereotypes, along with clinging to a gender binary, are the real problem.

Other languages communicate without gender, and English can too, by using the singular "they." Removing gender from language can help remove some of the biases we're teaching the next generation of communicators.

If Google keeps refining its algorithms and lets users select their preferred pronouns, we'll at least be one step further in the right direction.

COMICSANDS
